Used your vids as a starting point, but found a much simpler way to go about things (using the same concepts, though).

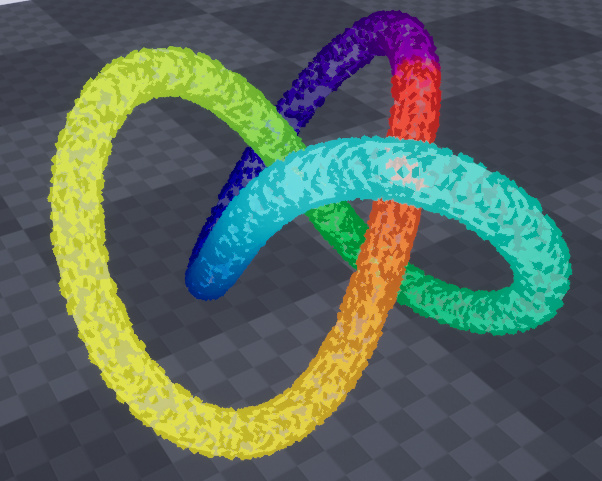

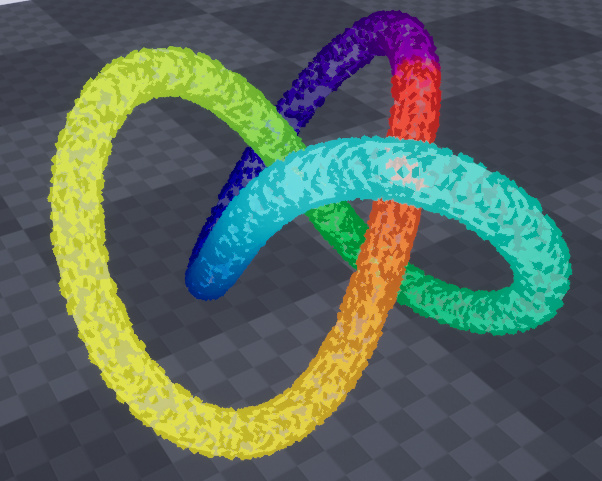

(simple particles on a mesh core with corresponding colors)

Got it, thank you! I didn’t have the receive events in the handlers. I didn’t even know those were there, ha.

Niagara early access released

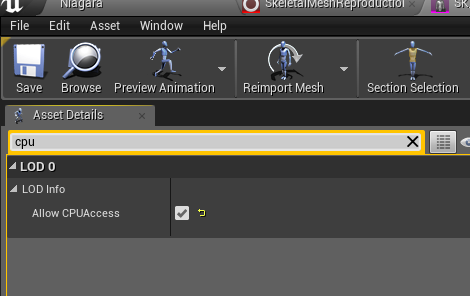

Is anybody else having problems using skeletal meshes as start locations? Only some work for me: tutorial_tpp, which is included in the engine, works, but the SK_Mannequin from the Third Person project doesn’t.

You sure you have this checked?

My hot take on Niagara after using it for a day: I really dig the node-based module scripting. I would have preferred the module stacking system for emitters to be more like Houdini’s DOP Network, but I understand why it is the way it is.

If I make a lot of changes to an individual emitter in a Niagara System asset, is there a way to “bake back” the changes I made to the parent emitter asset? (the opposite of “Reset this input to the value defined in the parent emitter”). Or even just save off the emitter “as is” as a new Niagara Emitter asset?

Hello, how do you achieve this effect?

So, a fun thing I learned today that might save the rest of you some frustration: setting a material to be “2-sided” will cause it to always be displayed as the default grid material if you try to use it with a Niagara emitter.

Also, using the Particle Time node to drive alpha erosion does the same thing: default grid material.

Just started messing with Niagara today.

Not sure if any Epic devs are still listening here, but I’m gonna throw a few questions out.

This could be really useful if you are debugging something that only happens sometimes and is hard to catch.
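For anyone wondering what such a capture could look like in principle, here is a minimal, engine-agnostic sketch of a rolling frame capture: it keeps only the most recent N frames of particle state, so when an intermittent glitch finally shows up you can inspect the frames leading up to it. This is not a Niagara feature or API; `ParticleSnapshot`, `FrameRecord`, and `FrameRingBuffer` are hypothetical names, and the per-particle fields are an assumption chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-particle state (assumption; not Niagara's attribute layout).
struct ParticleSnapshot {
    float x, y, z;
    float age;
};

// One recorded simulation frame: its index plus a copy of every live particle.
struct FrameRecord {
    long frame = -1;
    std::vector<ParticleSnapshot> particles;
};

// Fixed-capacity rolling capture: holds the most recent `capacity` frames and
// overwrites the oldest one, so memory stays bounded while waiting for a rare bug.
class FrameRingBuffer {
public:
    explicit FrameRingBuffer(std::size_t capacity) : slots_(capacity) {
        assert(capacity > 0);
    }

    // Store a frame in the next slot, overwriting the oldest frame once full.
    void Record(long frame, const std::vector<ParticleSnapshot>& particles) {
        FrameRecord& slot = slots_[next_];
        slot.frame = frame;
        slot.particles = particles;  // full copy; a real tool would likely avoid this
        next_ = (next_ + 1) % slots_.size();
        if (count_ < slots_.size()) ++count_;
    }

    // Number of valid frames currently held (never more than the capacity).
    std::size_t Size() const { return count_; }

    // i = 0 is the oldest retained frame; i = Size() - 1 is the newest.
    const FrameRecord& At(std::size_t i) const {
        assert(i < count_);
        std::size_t oldest = (next_ + slots_.size() - count_) % slots_.size();
        return slots_[(oldest + i) % slots_.size()];
    }

private:
    std::vector<FrameRecord> slots_;
    std::size_t next_ = 0;   // slot the next Record() call writes into
    std::size_t count_ = 0;  // number of valid frames
};
```

With a capacity of 3 and five recorded frames (0 to 4), the buffer retains frames 2, 3, and 4, oldest first. The design trades completeness for a fixed memory budget, which is usually what you want when leaving a capture running until a bug reproduces.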

I was hoping Wyeth made it to the end of the 3rd room today in the live stream. This is me playing with skelmeshes.

I’ve recently been playing with Niagara and found that a morph setup I have works when the sim target is set to CPU in the editor, but not when it is set to GPU.

Does anyone know the reason?

I’m using normal particles, not ribbons or anything else (I have two scripts: one to set the static mesh morph areas and one to update the system). I just wanted to know if there was a reason, whether a fix is planned, or if anyone has better insight.

We’ve talked about doing something similar to this for caching complex simulation and for debugging purposes, but it’s unlikely to be something we work on in the short term as there are lots of other higher priorities.

Direct reads from particles is an important feature we’ve been working on. I don’t have an exact time frame that I can give you, but I would expect it to be available within the next two engine releases.

Do you guys have any indication or time frame for when you would call Niagara production ready? I know there is a lot of functionality working in 4.20, but still the odd bug, etc. It would be really helpful to know.

[Edit: apologies if this information is public somewhere; I just can’t seem to find any info.]

[Edit 2: The Mill’s incredible Blackbird at GDC: The future of virtual production (fxguide). Looks like The Mill produced this tech demo using Niagara for real-time VFX.]

Unfortunately we don’t have an exact time frame that we can commit to for when Niagara will be production ready as there is still quite a bit of work to be done in terms of the feature set, full platform support, and performance optimization. I estimate we’ll be in early access for at least 2 more releases, but possibly more depending on how priorities shift within Epic.

We did work with The Mill to produce The Human Race last year which was the first real public use of Niagara, but it was still very early days for the toolset and runtime. We didn’t even have any of the stack UX built yet, but it was awesome to get external feedback and we started to see the awesome kinds of things that FX artists would be able to do once they got their hands on it.