Love that purple explosion. Really high quality effect

This approach sounds really innovative. I’ve been working on achieving a similar effect recently and ran into the same issue. I’m still having trouble fully understanding it—does using NDC Data mean we no longer need to sample RT in real-time? If possible, could you please share your project file or maybe make a video tutorial? I’d really appreciate your help!

This looks like a fantastic solution! Congratulations on coming up with that approach, that’s really impressive!

Do I understand it correctly that the blur manager would have to be active all the time (or at least as long as one or multiple blurs are active) and act as a listener to the datachannel?

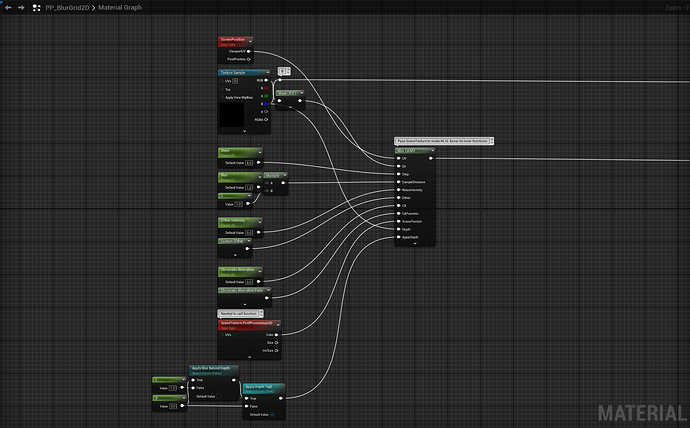

And would you mind sharing a screenshot of your postprocess material setup? I can’t quite figure out how you use the motion vector data to set up the blur.

Anyways, thanks a lot for sharing your approach!

Hey, sorry for the late reply!

Yes, you still use a Render Target in this method, but for something completely different.

The key difference from the Scene Capture method is what gets stored in the Render Target:

- With Scene Capture, you save the full scene color (including translucency) to a texture — it’s a snapshot of what’s on screen. Then, you blur that snapshot.

- With this new method, you don’t store the scene itself. Instead, you store vectors (motion direction + intensity) describing where and how to apply the blur. The full scene color is already available inside the postprocess pass, so you just need to tell it where to blur. That’s exactly what the Blur Manager computes from the NDC data it receives.
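To make the second bullet more concrete, here is a rough CPU-side sketch in Python of what "storing vectors instead of the scene" could look like for a single texel. It is not the actual Blur Manager from this thread. The inputs `center_uv`, `radius`, and `strength`, and the packing of the vector into R and G as `0.5 + 0.5 * v`, are assumptions made for illustration only.

```python
import math

def encode_blur_vector(pixel_uv, center_uv, radius, strength):
    """Return hypothetical (R, G) values for one render target texel.

    The vector points away from the blur center and is scaled by an
    intensity that falls off linearly with distance. Components are
    packed from [-1, 1] into [0, 1] so they fit a regular texture.
    All parameters are illustrative assumptions, with strength in [0, 1].
    """
    dx = pixel_uv[0] - center_uv[0]
    dy = pixel_uv[1] - center_uv[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0 or dist >= radius:
        return (0.5, 0.5)  # neutral texel: no blur here
    falloff = 1.0 - dist / radius      # 1 at the center, 0 at the edge
    scale = strength * falloff / dist  # normalize direction, apply intensity
    return (0.5 + 0.5 * dx * scale, 0.5 + 0.5 * dy * scale)
```

A texel outside the blur radius gets the neutral value `(0.5, 0.5)`, so the postprocess pass can leave that pixel untouched.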

I’m probably releasing this and other blur methods soon on Gumroad, if you’re interested.

Thank you, really!

Yes, you understood that perfectly! Since the manager is responsible for encoding the motion vectors, it needs to stay active as long as any active blur instance exists.
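The lifetime rule can be sketched as simple bookkeeping. This is only an illustration in Python, not engine code: the class name, the `register`/`unregister` methods, and the `should_run` property are all hypothetical.

```python
class BlurManagerSketch:
    """Hypothetical bookkeeping: stay active while any blur instance exists."""

    def __init__(self):
        self._active_blurs = set()

    def register(self, blur_id):
        # Called when a blur instance starts (assumed event).
        self._active_blurs.add(blur_id)

    def unregister(self, blur_id):
        # Called when a blur instance finishes (assumed event).
        self._active_blurs.discard(blur_id)

    @property
    def should_run(self):
        # The manager only needs to encode vectors while blurs are alive.
        return bool(self._active_blurs)
```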

Yeah, this is pretty much my setup.

Motion vectors are encoded in 2D, in screen space. Rather than computing the directions manually using the UV coords and a center like in a traditional radial blur function, you use the precalculated vector data stored in the render target texture by the manager.
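For anyone who wants to see the idea outside the material editor, here is a minimal CPU-side sketch in Python of blurring along a precomputed vector. It is not the postprocess material from this thread. The `[0, 1]` to `[-1, 1]` decoding, the `max_offset` and `taps` parameters, the nearest-neighbor lookup, and the choice to sample back toward the source are all assumptions.

```python
def decode_vector(r, g):
    """Unpack an assumed [0, 1] encoding back to a vector in [-1, 1]."""
    return (r * 2.0 - 1.0, g * 2.0 - 1.0)

def sample_nearest(image, u, v):
    """Nearest-neighbor lookup into a 2D list of grayscale values, clamped."""
    height, width = len(image), len(image[0])
    x = min(max(int(u * width), 0), width - 1)
    y = min(max(int(v * height), 0), height - 1)
    return image[y][x]

def directional_blur(image, uv, encoded_rg, max_offset=0.1, taps=8):
    """Average several taps along the stored vector instead of a computed radial one."""
    vx, vy = decode_vector(*encoded_rg)
    total = 0.0
    for i in range(taps):
        t = i / max(taps - 1, 1)  # 0 at the pixel, 1 at the farthest tap
        total += sample_nearest(image,
                                uv[0] - vx * max_offset * t,
                                uv[1] - vy * max_offset * t)
    return total / taps
```

A traditional radial blur would compute `vx, vy` per pixel from `uv - center`. Here the direction comes straight from the texture, so several blurs with different centers can share one pass.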

In my case, I also use channel B to pass depth data and perform depth testing and depth fade.
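One way the depth test and depth fade could work, sketched in Python. This is a guess at the general idea rather than the actual setup: the normalized depth values, the `fade_distance` parameter, and the linear ramp are assumptions.

```python
def depth_blur_weight(scene_depth, blur_depth, fade_distance):
    """Return a blur weight in [0, 1] from two depth values.

    scene_depth: depth of the pixel being shaded (assumed normalized).
    blur_depth: depth of the blur source, as read from channel B (assumed).
    Pixels in front of the blur source get 0 (depth test). Pixels behind
    it ramp up to 1 over fade_distance (depth fade).
    """
    if fade_distance <= 0.0:
        return 1.0 if scene_depth >= blur_depth else 0.0
    t = (scene_depth - blur_depth) / fade_distance
    return min(max(t, 0.0), 1.0)
```

The returned weight would then scale the blur strength, so geometry in front of the effect stays sharp.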

Thank you so much for the reply!

I will absolutely get this as soon as you release it on Gumroad. I’m looking forward to digging deeper into this! Would you mind sending a link to your Gumroad stuff so that I won’t miss it?

Thanks a lot and have a good day!