Hey! Many moons ago I started a thread on tech-artists.org where I wanted to collect useful realtime vfx knowledge in one place. It started out as a dictionary, but then I started collecting reference dumps, discussions from the Facebook group and so on. Some of the fine people on this site asked me whether we should revive this project here in our shiny new forum. I am 100% on board! I’ll start by bringing the old thread over. Then you can fill in what else you think should be in here. I’ll keep updating this post with your suggestions and edits. It might look like an unsorted mess to start with, but with time I think this could turn into something great! We just need to distill all the delicious information found below into something a bit more digestible. Any volunteers? Looking at you, @Keith and @Weili.Wonga! Somewhere down the line, perhaps this could be turned into a wiki and/or free book that could help new vfx artists out! *wipes tear from eye* Anyway, here goes!

Edit: It seems I hit the word limit. I’ll have to split the post and grab a few of the following posts so I can update it down the line.

====================================================================================

Alpha Erosion

Description: Scaling the levels of the alpha texture to give the appearance of the alpha eroding, or being eaten away.

Other names: Black Point Fade, Alpha Dissolve, Alpha Min/Max Scale.
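
A minimal sketch of the levels remap, written in Python rather than shader code so it runs anywhere. It assumes a single hypothetical `erosion` parameter in 0..1 that raises the black point of the alpha; in a real material this would typically be driven per particle over its lifetime.

```python
def erode_alpha(alpha: float, erosion: float) -> float:
    """Raise the black point of an alpha value (0 = untouched, 1 = fully eaten away)."""
    if erosion >= 1.0:
        return 0.0
    # Values at or below the new black point become fully transparent,
    # and the remaining range is stretched back to 0..1 so the core stays solid.
    remapped = (alpha - erosion) / (1.0 - erosion)
    return min(max(remapped, 0.0), 1.0)

# Soft, low-alpha edges disappear first as erosion increases.
for e in (0.0, 0.25, 0.5, 0.75):
    print(e, [round(erode_alpha(a, e), 2) for a in (0.2, 0.5, 0.9)])
```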

Bent sprite

Description: A method of improving the lighting on a sprite. Achieved by bending the normals away from center.

Other names: Benticle
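
A rough sketch of one way to compute a bent normal across the quad in tangent space. It assumes UVs in 0..1, +Z pointing at the camera, and a hypothetical `bend` amount; `bend = 0` gives the flat camera-facing normal, larger values tilt the edges outward so the sprite shades more like a rounded volume. Whether V points up or down depends on the engine.

```python
import math

def bent_normal(u: float, v: float, bend: float) -> tuple[float, float, float]:
    """Tangent-space normal for a sprite pixel, bent away from the sprite's center."""
    # Offset from the center of the quad, remapped to -1..1 and scaled by bend.
    x = (u * 2.0 - 1.0) * bend
    y = (v * 2.0 - 1.0) * bend
    # Z faces the camera; normalizing keeps the result a unit vector.
    length = math.sqrt(x * x + y * y + 1.0)
    return (x / length, y / length, 1.0 / length)
```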

Billboard

Description: A billboard is similar to a sprite, but it’s not simulated and is usually constrained to only rotate around the up axis.

Other names: VAP (ViewAlignedPoly)
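
A minimal sketch of the "rotate around the up axis only" constraint, assuming a Y-up coordinate system and a hypothetical function name. The billboard turns to face the camera horizontally but ignores the camera's height, so it never tilts.

```python
import math

def billboard_yaw(billboard_pos, camera_pos) -> float:
    """Yaw angle (radians, around +Y) that turns the billboard's +Z face toward the camera."""
    dx = camera_pos[0] - billboard_pos[0]
    dz = camera_pos[2] - billboard_pos[2]
    # The Y difference is deliberately ignored: the billboard only spins around up.
    return math.atan2(dx, dz)
```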

Camera Parented Effects

Description: A particle system that has been parented under the camera so it follows along with the player.

Other names: Camera proximity Effects, Screen attached effects

Depth Fading Particles

Description: The particle’s pixel shader compares its depth against the scene depth and fades the alpha as the gap between them shrinks. This gives the particle a soft look where it intersects with objects.

Other names: Soft particles, Z-Feathered particles, Depthtest particles.
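
A minimal sketch of the depth fade, assuming both depths are linear view-space distances (raw depth buffer values are usually non-linear and would need converting first) and a hypothetical `fade_distance` parameter.

```python
def soft_particle_alpha(alpha, scene_depth, particle_depth, fade_distance):
    """Fade alpha as the particle pixel approaches the opaque scene behind it."""
    # How far behind the particle the nearest opaque surface is.
    gap = scene_depth - particle_depth
    # 0 where the particle touches geometry, 1 once the gap reaches fade_distance.
    fade = min(max(gap / fade_distance, 0.0), 1.0)
    return alpha * fade
```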

Distance Culling

Description: A way to stop the particles from simulating/rendering after a specified distance.

Other names: Cullradius
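
A minimal sketch of a per-emitter cull check, assuming a simple sphere around the camera with a hypothetical `cull_radius`; real engines may also use bounding volumes or hysteresis to avoid popping at the boundary.

```python
def should_simulate(emitter_pos, camera_pos, cull_radius):
    """Return False once the emitter is farther from the camera than cull_radius."""
    dx, dy, dz = (e - c for e, c in zip(emitter_pos, camera_pos))
    # Compare squared distances to avoid a square root per check.
    return dx * dx + dy * dy + dz * dz <= cull_radius * cull_radius
```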

Distance Fade

Description: A way of fading the alpha of particles based on their distance from the camera. For example, fading particles out as they get very close to the camera stops them from clipping into the screen.

Other names:
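
A minimal sketch of a near-camera fade, assuming two hypothetical distances: the particle is fully visible at `fade_start` or farther and fully transparent at `fade_end` or closer (`fade_end < fade_start`). The result is multiplied into the particle's alpha.

```python
def near_camera_fade(distance, fade_start, fade_end):
    """Alpha multiplier that fades a particle out as it approaches the camera."""
    t = (distance - fade_end) / (fade_start - fade_end)
    return min(max(t, 0.0), 1.0)
```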

Emitter

Description: The source of particles. In most engines this is what contains all the data that determines how the particles simulate. What data is present at this level varies. Some engines have all of the settings here. Some engines have an effect container above this with additional settings. The effect container usually allows you to combine several emitters to create the effect. Example: An effect container would contain a fire emitter, a smoke emitter and a spark emitter. The spark emitter would contain data like texture, colour, initial velocity and so on.

Other names: Layer
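
A sketch of the container/emitter split from the example above, using Python dataclasses. The field names are hypothetical; every engine exposes a different set of settings at each level.

```python
from dataclasses import dataclass, field

@dataclass
class Emitter:
    """One layer of an effect: the settings that drive how its particles simulate."""
    name: str
    texture: str
    colour: tuple[float, float, float]
    initial_velocity: tuple[float, float, float]
    spawn_rate: float  # particles per second

@dataclass
class EffectContainer:
    """Groups several emitters so they play together as one effect."""
    name: str
    emitters: list[Emitter] = field(default_factory=list)

campfire = EffectContainer("campfire", [
    Emitter("fire", "fire_anim", (1.0, 0.6, 0.2), (0.0, 1.0, 0.0), 30.0),
    Emitter("smoke", "smoke_loop", (0.3, 0.3, 0.3), (0.0, 0.5, 0.0), 10.0),
    Emitter("sparks", "spark_atlas", (1.0, 0.8, 0.4), (0.0, 4.0, 0.0), 5.0),
])
```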

Flipbook

Description: A sequence of textures compiled into one image. The pixel shader on the sprite moves the UVs to the different sections of the image, thus displaying different frames of the sequence.

Other names: Animated Texture Atlas, Spritesheet, SubUV Texture.

Common Naming Convention:

texname_atlas = Unrelated frames used for random texture selection.

texname_anim = An animation that has a start and end.

texname_loop = An animation that repeats indefinitely.
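
A minimal sketch of the UV math, assuming frames are laid out left to right, top to bottom, with V = 0 at the top; the function name is hypothetical. This version wraps the frame index, which suits a `_loop` texture. For an `_anim` texture you would clamp to the last frame instead, and for an `_atlas` you would pick a random frame per particle at spawn.

```python
def flipbook_uv(u, v, frame, columns, rows):
    """Map a sprite's 0..1 UV into the cell for `frame` in a columns x rows flipbook."""
    frame %= columns * rows  # wrap so the sequence loops
    col = frame % columns
    row = frame // columns
    return ((col + u) / columns, (row + v) / rows)
```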

Force

Description: A force affects the velocity of a particle after it’s been born. This can be used to simulate wind, turbulence and attraction.

Other names: Attractor (Basic version of force)
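
A minimal sketch of forces acting on a particle after birth: a constant wind plus an optional point attractor. It assumes unit mass (forces are treated as accelerations), simple explicit Euler integration, and a linear, spring-like pull toward the attractor; all names are hypothetical.

```python
def apply_forces(position, velocity, dt, wind=(0.0, 0.0, 0.0), attractor=None, strength=0.0):
    """Advance one particle by dt seconds under wind and an optional attractor."""
    accel = list(wind)
    if attractor is not None:
        # Pull toward the attractor, proportional to the offset and strength.
        for i in range(3):
            accel[i] += (attractor[i] - position[i]) * strength
    # Forces change velocity first, then velocity moves the particle.
    velocity = tuple(v + a * dt for v, a in zip(velocity, accel))
    position = tuple(p + v * dt for p, v in zip(position, velocity))
    return position, velocity
```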

Mesh Particle

Description: A polygonal mesh instanced onto the particles.

Other names: Entity particles

On Screen Effects

Description: An effect that plays on the player’s screen. This could be particle or shader driven. An example would be blood splatter on the camera.

Other names: Camera space effects, Fullscreen effects

Particlesystem

Description: A collection of emitters making up an effect.

Other names: Effect Container

Particle trail

Description: A particle rendering method where a quad strip is constructed from the particle data.

Other names: Trail, Geotrail, Ribbon, Swoosh, Billboardchain
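
A simplified 2D sketch of building the quad strip: each particle contributes two vertices offset to either side of the trail's direction, and consecutive pairs form the quads. In 3D an engine would typically offset perpendicular to both the trail direction and the view vector so the ribbon faces the camera; the function name is hypothetical.

```python
import math

def ribbon_vertices(points, width):
    """Build a 2D quad strip (two vertices per particle) from (x, y) positions."""
    verts = []
    for i, (x, y) in enumerate(points):
        # Trail direction, estimated from the neighbouring particles.
        nx, ny = points[min(i + 1, len(points) - 1)]
        px, py = points[max(i - 1, 0)]
        dx, dy = nx - px, ny - py
        length = math.hypot(dx, dy) or 1.0
        # Perpendicular to the direction, scaled to half the ribbon width.
        ox, oy = -dy / length * width * 0.5, dx / length * width * 0.5
        verts.append((x + ox, y + oy))
        verts.append((x - ox, y - oy))
    return verts
```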

Preroll

Description: A method of running a particle system for a set amount of time “behind the scenes” so when it’s first made visible, it’s already in place.

Other names: Prewarm
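
A minimal sketch of preroll: the system's normal update runs a number of times off-screen before the first visible frame. It assumes a fixed timestep and a hypothetical `system_step(state, dt)` update function; the toy system below just spawns and ages particles.

```python
def preroll(system_step, state, preroll_seconds, dt=1.0 / 30.0):
    """Run a particle system's update off-screen so it starts fully developed."""
    steps = int(round(preroll_seconds / dt))
    for _ in range(steps):
        state = system_step(state, dt)
    return state

# Toy system: each particle is just its age; particles live for 2 seconds.
def toy_step(particles, dt):
    aged = [age + dt for age in particles if age + dt < 2.0]
    return aged + [0.0]  # spawn one new particle per step

smoke = preroll(toy_step, [], preroll_seconds=3.0)
```

Without preroll the smoke would start as a single particle; after three simulated seconds it is already a full column of particles of varying ages.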

Sprite

Description: The most basic tool of a vfx artist. In its simplest form it’s a camera-facing quad with a texture applied to it. There are countless variations on this with different alignment options, but then one could argue that it’s a quad rather than a sprite.

Other names:
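
A minimal sketch of the camera-facing quad, assuming the camera's world-space right and up unit vectors are available (hypothetical parameter names). Building the corners from those vectors keeps the quad parallel to the screen no matter where the camera looks.

```python
def sprite_corners(center, cam_right, cam_up, size):
    """Four corners of a camera-facing quad of the given size."""
    h = size * 0.5
    corners = []
    for sx, sy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
        # Step half the size along the camera's right and up axes.
        corners.append(tuple(c + (sx * r + sy * u) * h
                             for c, r, u in zip(center, cam_right, cam_up)))
    return corners
```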

UV Distortion

Description: A method of distorting the UV coordinates to generate extra detail in an effect. It can be extended to be driven by a motion vector mask to blend smoothly between frames.

Other names: UV Warping
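
A minimal sketch of basic UV distortion. A hypothetical procedural function stands in for a scrolling distortion texture; its 0..1 output is recentered to -1..1 so the image wobbles in place instead of drifting, then added to the UVs before the main texture is sampled. The motion vector extension is not shown.

```python
import math

def distortion_noise(u, v, time):
    """Stand-in for a scrolling distortion texture: two values in 0..1."""
    n1 = 0.5 + 0.5 * math.sin((u + time * 0.1) * 12.0)
    n2 = 0.5 + 0.5 * math.cos((v - time * 0.1) * 9.0)
    return n1, n2

def distort_uv(u, v, time, strength):
    """Offset the UVs by the noise, recentered on zero and scaled by strength."""
    n1, n2 = distortion_noise(u, v, time)
    return u + (n1 * 2.0 - 1.0) * strength, v + (n2 * 2.0 - 1.0) * strength
```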

====================================================================================

Discussion about creating Rain:

Fill rate is your biggest enemy. We had a programmer build a culling system to keep the particle count fixed. Building a single post process would be very challenging. - William Mauritzen

We use a combo of particles, screen effects, and at base, a stateless screen attached, curve animated system of what I’ll call planes (very programmatically created and optimized) to form the largest quantity of raindrops.

I think most of this depends on what you want to do with it. Putting multiple drops in a single card will cut back your particle counts, probably increase your overdraw, but will also allow you to create a heavy storm much more easily than otherwise.

Is there a run-time interactive component like Watch Dogs slow-mo? If so, that changes things completely too, etc etc etc. - Keith Guerette

You can look at Seb Lagarde’s tricks here: http://www.fxguide.com/featured/game...onments-partb/ - Marz Marzu

We don’t use “real” particles for rain but instead have a box around the camera that is filled with drops through shader trickery. It reacts to camera movement so the faster you move the more they streak and pretty much all the settings are editable (size of rain drops, size of camera volume, alpha, brightness, camera-movement-influence and so on). For specific areas where you’re under a roof looking out we place drip effects to simulate water dripping off the edge. It’s not the best way to do it but it works well enough. In the future we want to get better distant rain, maybe using sheets with scrolling textures to get the dense, heavy rain look. Would also be nice to have splashes on the ground but they’ve been too costly in the past (and you don’t really see them when you’re driving at 120mph)… - Peter Åsberg
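
A rough sketch of the "box around the camera" idea, not Peter's actual shader. Each drop keeps a fixed position, and wrapping the offset from the camera with a modulo places it inside a box centred on the camera, so drops never need respawning as the camera moves. The streak formula and all names are assumptions.

```python
def wrap_drop(drop_pos, camera_pos, box_size):
    """Place a drop inside a box of side box_size centred on the camera."""
    half = box_size * 0.5
    # Python's % returns a value in [0, box_size) for a positive box_size,
    # so each axis lands in [-half, half) relative to the camera.
    return tuple(c + ((d - c + half) % box_size) - half
                 for d, c in zip(drop_pos, camera_pos))

def streak_length(base_length, camera_speed, influence):
    """Drops stretch more the faster the camera moves."""
    return base_length + camera_speed * influence
```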

We do it with particles parented to the camera: single drops closest, and multiple drops per plane further away. Then we have some particles playing on the camera. - Andreas Glad

====================================================================================

Discussion about color remapping:

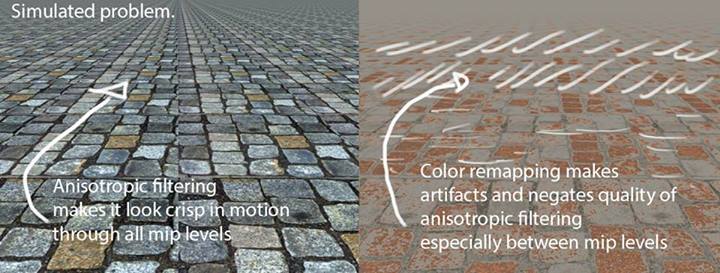

I’m trying to use color remapping outside of FX, and the aliasing artifacts it introduces are making it unusable. Has anyone used the technique to make color maps for lit objects without breaking the performance bank to smooth out the artifacts? Has anyone heard of the technique being used for non-alpha surfaces other than the L4D2 horde? I don’t necessarily need a solution, I’m just trying to sort out whether this is even worth chasing. - Mark Teare

Try using uncompressed grayscale maps for your remap textures if possible. DDS is pretty brutal with the gradient maps for color remapping. - Matt Vitalone

I’m a little unclear what you are doing. Are you using the brightness as a UV for a gradient texture? If so, I find it helps to use a small texture. It seems counterintuitive, but if you have a large texture you will get stepping due to the fact that it is only 8 bits per channel. If your gradient crosses enough texels, you will end up with more than one texel in a row with the same value (producing a stair step). If you have a small texture, each texel will have a different value and the interpolation will create a smooth transition. - Eben Cook
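
A small sketch of Eben's point about ramp size, assuming an 8-bit single-channel ramp and a simplified linear filter that ignores the half-texel offset a GPU would apply. The helper names are hypothetical. `sample_clamped` also clamps U, as Edwin suggests further down the thread, so the filter never blends the two ends of the ramp.

```python
def make_gradient(texel_count, start, end):
    """An 8-bit ramp: each texel is rounded to an integer value."""
    return [round(start + (end - start) * i / (texel_count - 1)) for i in range(texel_count)]

def sample_clamped(ramp, u):
    """Linearly filtered lookup with U clamped to 0..1 (no wrapping between the ends)."""
    u = min(max(u, 0.0), 1.0)
    x = u * (len(ramp) - 1)
    i = min(int(x), len(ramp) - 2)
    t = x - i
    return ramp[i] * (1.0 - t) + ramp[i + 1] * t

def has_flat_steps(ramp):
    """True if neighbouring texels share a value, which filters into a stair step."""
    return any(a == b for a, b in zip(ramp, ramp[1:]))

large = make_gradient(256, 0, 16)  # a subtle ramp spread over many texels
small = make_gradient(17, 0, 16)   # the same ramp with one texel per value
print(has_flat_steps(large), has_flat_steps(small))
```

The large ramp repeats values across neighbouring texels, so filtering produces flat runs; the small ramp gives every texel a distinct value and lets interpolation fill in between.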

Right now what I am doing is taking a grayscale version of an artist’s color map and recoloring it with a gradient texture. So there is the possibility of artifacts from the gradient compression, but the really ugly stuff comes from the fact that the pixel is recolored after all of the filtering our engine does to get the onscreen color value (I am paraphrasing my graphics programmer). If you put the original color texture next to the remapped grayscale one in game and move around, you get all kinds of noise, especially when moving between mip levels. - Mark Teare

If you’re in Unreal, you can try various compression settings. Make sure your grayscale isn’t sRGB. Accept full dynamic range. Choose a different compression setting. There’s also a way to stop it from mipping. Choose the other filter setting and you’ll see more pixelation but less weird lerping. - William Mauritzen

Are you guys using this technique on a lot of lit surfaces? - Mark Teare

You’ll also want to make sure your LOD group isn’t smaller than your imported size.

I did a color remap that allowed for twenty slots of customization. We couldn’t have any non-adjacent colors and we had to hide seams. - William Mauritzen

We tried using it on a project and were able to minimize the aliasing by using really smooth color ramps (not too many colors and no sudden changes), but the colors still smeared into each other a bit too much when viewed at a distance. That didn’t produce any flickering, but we would lose a lot of detail when objects were far away. - Arthur Gould

Do you have an example of the artifacts you are getting for us, Mark? - Edwin Bakker

I made a generic example illustrating my problem (details in my last post). I tried all of the suggestions above to lessen the problem (all of which were good tips, BTW, thanks), but in the end there are still fairly bad artifacts stomping/swimming all over the anisotropic filtering when the texture is recolored using the gradient. I can try to get programming to fix this, but at first glance the programmer was afraid a fix would be too costly performance-wise and still not look as good. Has anyone solved this particular problem? Cheaply? - Mark Teare

Hmm… So did you try and clamp the color ramp U?

I assume you do not want the light grey areas, which I think come from the filtering between the pixels on U coordinates 0 and 1. The sample errors will get worse as the mipmap texture gets smaller. - Edwin Bakker

Well, this image is just bogus Photoshop stuff, so don’t read too much into it. Yeah, we clamp the U. As I understand it, the issue is that when sampling from a color texture, the filtering works on the color information, but when remapping, the filtering is done on the grayscale image and the color is applied afterward, which negates the quality of the filtering.

Edwin, have you shipped remapping in a scenario like this? Flat-ish tiling surface? - Mark Teare
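
A toy illustration of why the order matters, using a deliberately harsh two-color ramp and nearest lookup for brevity; the names and values are hypothetical. Averaging two texels (as a mip or filter would) after coloring gives a blend of the two colors, but averaging the grayscale first and then coloring picks whatever sits in the ramp at the averaged value, which can be a completely different color.

```python
def remap(gray, ramp):
    """Color lookup: the grayscale value (0..1) indexes a ramp of RGB tuples."""
    index = min(int(gray * len(ramp)), len(ramp) - 1)
    return ramp[index]

def average(colors):
    """Per-channel mean, standing in for texture filtering or mip generation."""
    return tuple(sum(channel) / len(colors) for channel in zip(*colors))

# A ramp that jumps from red to blue halfway, and two neighbouring grayscale texels.
ramp = [(1.0, 0.0, 0.0)] * 4 + [(0.0, 0.0, 1.0)] * 4
texels = [0.2, 0.8]

# Regular color texture: texels already carry color, then get filtered.
color_then_filter = average([remap(g, ramp) for g in texels])
# Remapping: the grayscale is filtered first, then colored.
filter_then_color = remap(sum(texels) / len(texels), ramp)
```

Here the first path gives purple and the second gives pure blue. Smoother ramps shrink the difference, which matches Arthur's experience of smearing rather than flicker.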

I am afraid I have not, what happens if you use an uncompressed greyscale instead? - Edwin Bakker

What hardware/shader language is this for? It’s possible that you’re not getting good gradients for the color lookup. General gist: you get good gradient info for the greyscale texture lookup, since it’s using UVs the normal way (the amount the UV changes between pixels is the gradient; when it’s smooth you get nicely filtered/aniso/etc. results, and the magnitude of the change between pixels tells the GPU which mip to use). But then think about the rate of change for the next texture lookup: it depends on the greyscale difference between adjacent pixels, which can vary widely and change the mip you are using dramatically between each group of 2x2 pixels, producing inconsistent-seeming results. There are related problems when doing texture fetches inside of branches (I googled and found this example: http://hacksoflife.blogspot.com/2011…l-texture.html).

So, two suggestions for you. First, look at the un-remapped image: do the artifacts show up wherever there are high-contrast edges in the greyscale? That would suggest it might be what I rambled about. Second, you could try using a small color lookup texture with no mips (or maybe 1 mip) to see if you can mitigate it. But as always, running with no mips can cause its own headaches and shimmery problems. - Peter Demoreuille
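
A simplified sketch of the mechanism Peter describes: the GPU picks a mip from how fast the lookup coordinate changes between neighbouring pixels. It uses the common isotropic approximation (log2 of the largest texel-space derivative) and ignores anisotropic filtering; the function name and numbers are hypothetical.

```python
import math

def mip_level(du_dx, du_dy, texture_width):
    """Approximate mip level for a 1D lookup from how fast U changes between pixels."""
    # Texels stepped per screen pixel, in the worse of the two screen directions.
    texels_per_pixel = max(abs(du_dx), abs(du_dy)) * texture_width
    if texels_per_pixel <= 1.0:
        return 0.0  # magnified or 1:1, so the full-resolution mip is used
    return math.log2(texels_per_pixel)

# First lookup: the base UVs change smoothly across the 2x2 pixel quad.
print(mip_level(1 / 512, 1 / 512, 512))  # one texel per pixel -> mip 0

# Dependent lookup: U is the greyscale value, which can jump across a high-contrast edge.
print(mip_level(0.5, 0.0, 256))  # 128 texels per pixel -> mip 7
```

A single hard edge in the greyscale can push the ramp lookup several mips down for that 2x2 quad only, which is one plausible source of the patchy artifacts Mark sees.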

Ah, we’ve got an expert in the field. I’ll go back to drinking coffee. - Edwin Bakker

====================================================================================