That’d be awesome! All the stuff I’ve found is like “here’s a 2 hour tutorial on texturing a spaceship”

Alright, regarding the normal generation, I think I might be onto something. Did a lil prototype inside Substance and will try it out in Unreal tomorrow:

Ok, I got something. Beware, 7 MB GIF:

https://dl.dropboxusercontent.com/u/10717062/Polycount/FX_Liquid_01.gif

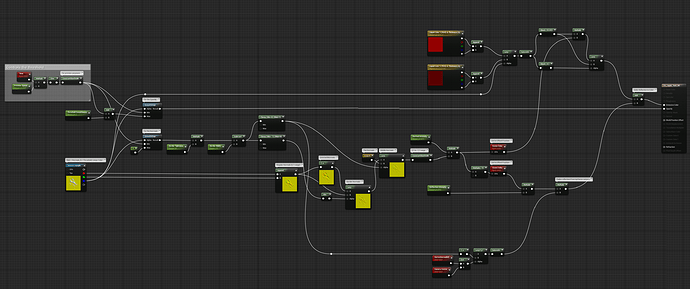

Shader network: it's an unlit translucent material, so everything is fake and physically incorrect.

All the files are in a lil pack here:

Wow! Nicely done.

You are ninja.

Legendary! Thanks for all the help everyone!

Hi Matt, how do you do it with ddx and ddy? I never played with that before and tried appending them together. While it looks convincing in some cases, if you drive the intensity up you start to notice that the normals are pointing in the wrong direction. It did get super aliasy as you mentioned, so I had to fade them over distance.
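For anyone following along, here is a rough CPU-side sketch in Python of what "appending ddx and ddy into a normal" amounts to. It is not Matt's setup or the actual shader: `height`, `ddx`, `ddy` and `normal_from_derivatives` are hypothetical stand-ins, and the derivatives are approximated as plain one-pixel differences. The one thing it does show for certain is the sign convention: a surface z = f(x, y) has a normal proportional to (-df/dx, -df/dy, 1), so appending the raw derivatives without negating them tilts the normal the wrong way.

```python
import math

def height(x, y):
    # Hypothetical per-pixel height/mask value: a radial bump centered at (8, 8).
    return math.exp(-((x - 8.0) ** 2 + (y - 8.0) ** 2) / 16.0)

def ddx(f, x, y):
    # Rough stand-in for the shader's ddx: difference to the next pixel to the right.
    return f(x + 1, y) - f(x, y)

def ddy(f, x, y):
    # Rough stand-in for the shader's ddy: difference to the next pixel down.
    return f(x, y + 1) - f(x, y)

def normal_from_derivatives(f, x, y, strength=1.0):
    # Negate the slopes so the normal leans away from the uphill direction.
    nx = -ddx(f, x, y) * strength
    ny = -ddy(f, x, y) * strength
    nz = 1.0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)

# Pixel (4, 8) sits on the left slope of the bump, so the normal should lean left.
n = normal_from_derivatives(height, 4, 8, strength=4.0)
print(n)
```

Since the differences are measured per screen pixel, their size changes with distance and zoom, which is one reason a fixed intensity can look fine up close and fall apart further away. Whether the green channel also needs flipping depends on the engine's normal map convention.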

Dude I didn’t know about fine! That’s awesome!

@Bruno Any mipping issue from what you could see?

ddx(variable)

Variable can be anything

As long as it’s a float!

Well, that’s not true, but it’s rare that derivatives will give you anything useful when used with integers or bools, since the derivative will be quantized too, and feeding in a uniform or constant may return junk data in some cases.
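To make the quantization point concrete, here is a small Python sketch that mimics a coarse horizontal derivative over pixel pairs. `ddx_quad` is a hypothetical helper, not a real API, and it only assumes that both pixels of a pair share the same difference. It does not model the junk values mentioned above for uniforms or constants; in this emulation a constant simply gives zero.

```python
def ddx_quad(values):
    # values: one row of per-pixel values.
    # Pixels are processed in pairs and both pixels of a pair
    # receive the same horizontal difference (a coarse derivative).
    out = []
    for i in range(0, len(values) - 1, 2):
        d = values[i + 1] - values[i]
        out += [d, d]
    return out

ramp = [i * 0.3 for i in range(8)]   # smooth float input
quantized = [int(v) for v in ramp]   # same input truncated to integers
constant = [0.5] * 8                 # same value at every pixel

ddx_float = ddx_quad(ramp)       # roughly 0.3 everywhere: a usable slope
ddx_int = ddx_quad(quantized)    # mostly 0 with an occasional jump of 1
ddx_const = ddx_quad(constant)   # all zeros in this emulation

print(ddx_float)
print(ddx_int)
print(ddx_const)
```

The float ramp gives a steady slope, while the integer version reports either nothing or a whole step, which is rarely what you want to feed into a normal.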

Hey Bruno,

This is incredible. I was wondering if you still had your files available? I would love to have a peek if possible.

Still available, but the link was broken since Dropbox eliminated the public folder. Updated the post with the correct link!

Hey Bruno, do you have the instruction count for that setup handy? I’m working on another way to generate threshold normals, but I’d like to compare notes and see which is the cheaper option. I’m generating mine off of a grayscale texture with no normals packed in.

Working off of my smoothstep function (same as yours), my method is 17 extra instructions to generate normals, vs just a test append of the smoothstep to get a normal map.

Hmm I don’t really, haven’t touched that in quite a while. Are you generating normals by sampling the neighboring pixels of the threshold map?
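For reference, the neighbor-sampling idea asked about here could look roughly like the Python sketch below. This is an assumption about the general technique, not either poster's actual material: `sample`, `threshold_normal`, the `softness` and `strength` parameters and the 5x5 test map are all made up for illustration. It thresholds a grayscale map with smoothstep and takes central differences of the result.

```python
import math

def smoothstep(edge0, edge1, x):
    # Standard smoothstep: clamp to [0, 1], then apply 3t^2 - 2t^3.
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)

def sample(tex, x, y):
    # Clamp-to-edge lookup into a 2D list of grayscale values.
    h, w = len(tex), len(tex[0])
    return tex[max(0, min(h - 1, y))][max(0, min(w - 1, x))]

def threshold_normal(tex, x, y, threshold, softness=0.1, strength=1.0):
    def mask(px, py):
        # Eroded mask value at a pixel for the current threshold.
        return smoothstep(threshold - softness, threshold + softness,
                          sample(tex, px, py))
    # Central differences of the thresholded mask (four neighbor lookups).
    dx = (mask(x + 1, y) - mask(x - 1, y)) * 0.5
    dy = (mask(x, y + 1) - mask(x, y - 1)) * 0.5
    nx, ny, nz = -dx * strength, -dy * strength, 1.0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)

# Hypothetical 5x5 grayscale map: a left-to-right ramp from 0 to 1.
tex = [[x / 4.0 for x in range(5)] for _ in range(5)]
n = threshold_normal(tex, 2, 2, threshold=0.5, softness=0.3)
print(n)
```

The cost in this sketch is four extra lookups plus a smoothstep per lookup for every pixel, which is the kind of overhead worth comparing against a packed normal texture.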

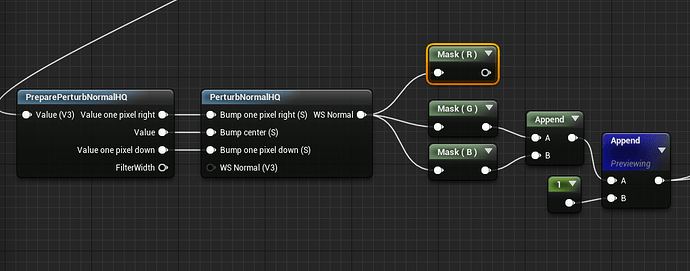

I’m currently using the same setup that Klemen is using in this little demo

Right. I’d assume that using a texture instead of calculating on the fly will almost always be cheaper, especially in my case since I’m using a single texture sample. I’m not very familiar with the perturbNormalHQ function though… Sorry!

No worries! I’m testing it out since I usually pack different textures in my RGB channels, and I need a way to make some for grayscale textures that erode out. I’m happy with the outcome so far, but I’m unsure if there’s a cheaper method to achieve what I need.

One caveat I found with the PerturbHQ setup, though: it outputs a blue and green channel (no red), so if anyone is looking into using this setup, you have to mask out and append stuff. Also, the input for PreparePerturbNormalHQ says Vector 3, but it gets angry if you actually try to use a V3. It seemed to work just fine with my masked out green channel from my texture…