Hello! First of all, I want to say a few things: I’m not a native English speaker, so I apologize for any mistakes, and I’m a total newbie in VFX and on forums (this is my first forum post ever!), so sorry if this post is unnecessarily long or lacking something.

As I said, I’m a total newbie in this field, and I ran into an issue while trying to make a basic additive surface shader following SirHaian’s tutorial on making sparks in Unity.

My problem happens when adding the particles to the scene. After creating the particle system and adding the texture I previously made in Photoshop, the particles appear with a black background, even though I followed the tutorial’s steps for creating an additive shader correctly (or at least I think I did).

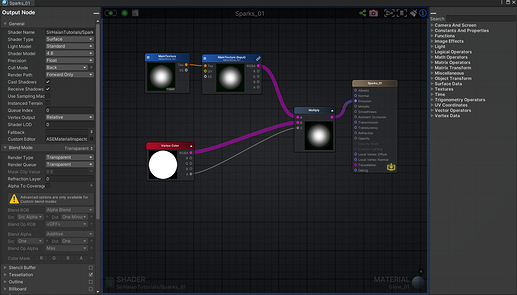

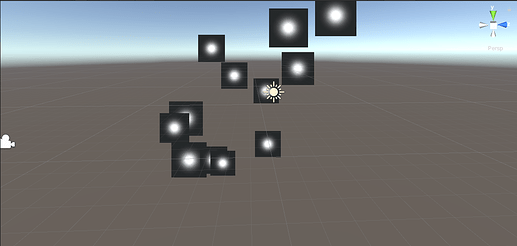

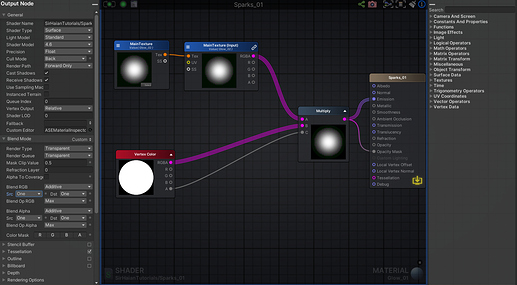

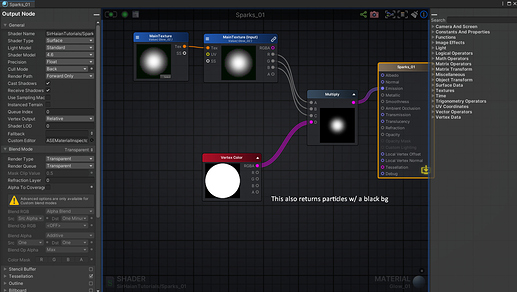

My first ASE settings, when I encountered the issue, were these:

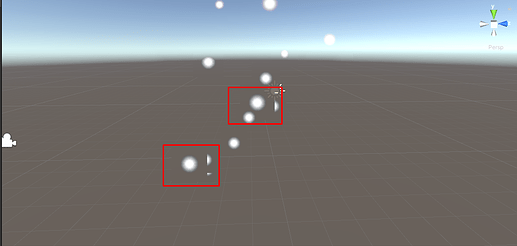

The results I was getting from these settings were these:

Then, after a really long time messing with some parameters, I figured out a way to make the background of the particles transparent, but not quite the way I wanted it to be.

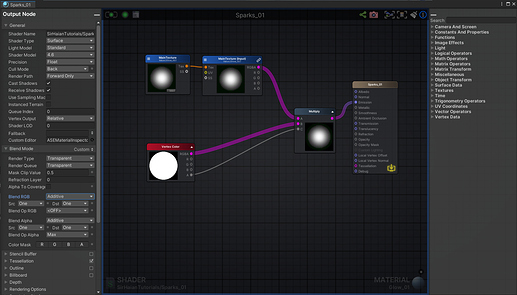

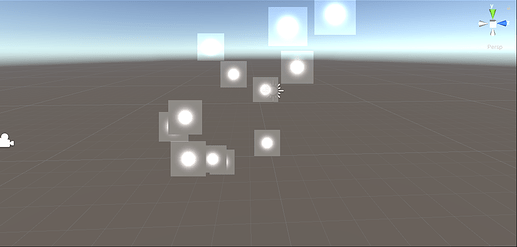

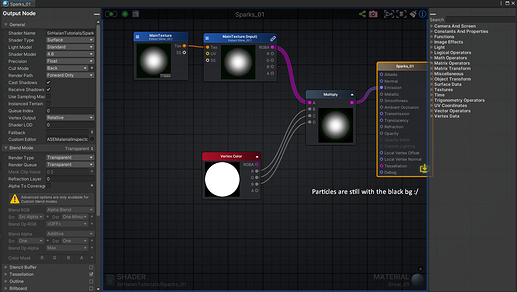

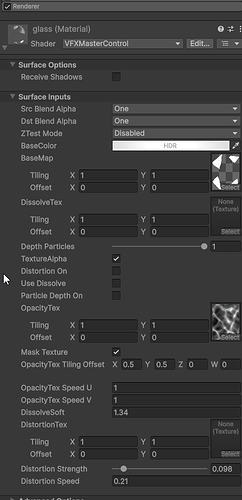

These were the settings I used:

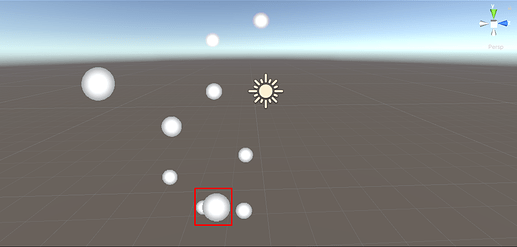

And these were the results:

By setting “Blend Op RGB” to “Max”, I achieved this result:

The particles’ background may seem transparent, but if you look closely inside the rectangles I drew, you can see that the outline of each particle’s background is still blocking other particles from view.

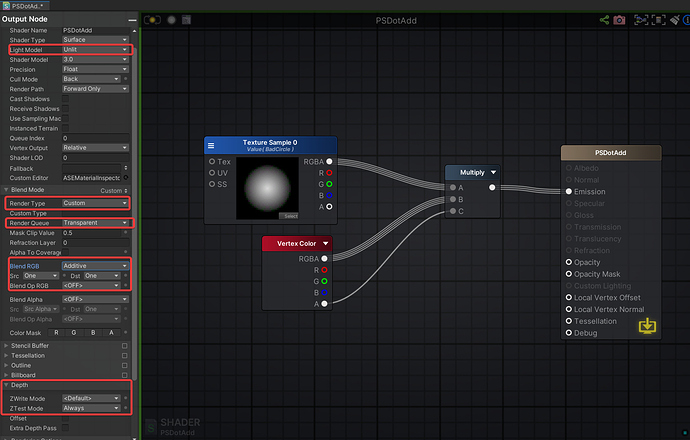

I then managed to get this problem (half) solved by applying these settings:

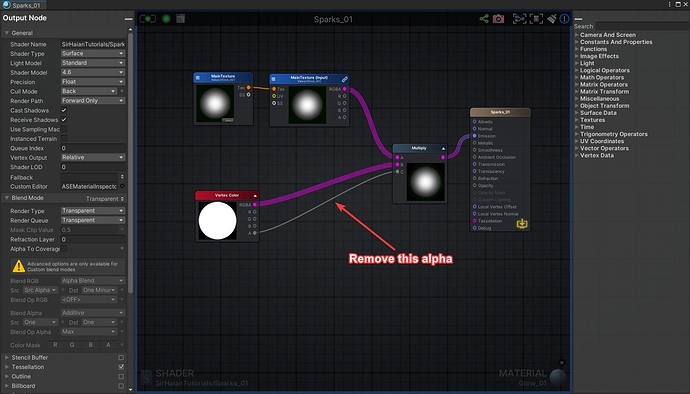

Which gave me this output:

Now the background of each particle is transparent, but the particles themselves have rough edges, no glow, and are fully opaque. This is not quite the effect SirHaian achieves in his tutorial.

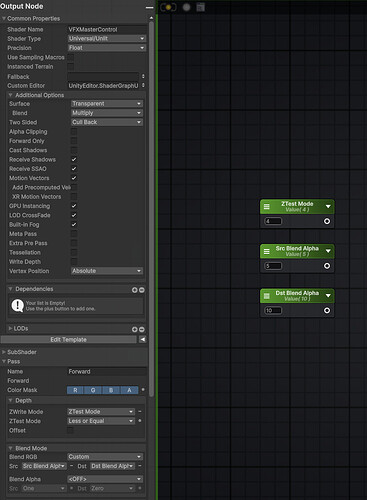
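For reference, here is a minimal sketch of the blend arithmetic as I understand it, assuming the usual model where each color channel of the particle (source) is combined with what is already on screen (destination) and the result is clamped to the 0..1 range. The function names are made up for illustration and are not ASE or Unity APIs. Under these assumptions, a black texel should add nothing in additive mode, which is why the black background surprised me.

```python
# Hypothetical helpers that only model per-channel blend arithmetic.
# This is not ASE or Unity code; colors are (r, g, b) tuples in 0..1.

def clamp01(x):
    """Clamp a channel value to the 0..1 range (assumes an LDR target)."""
    return max(0.0, min(1.0, x))

def blend_additive(src, dst):
    """Blend One One with Blend Op Add: result = src + dst."""
    return tuple(clamp01(s + d) for s, d in zip(src, dst))

def blend_max(src, dst):
    """Blend Op Max: result = max(src, dst) per channel."""
    return tuple(max(s, d) for s, d in zip(src, dst))

def blend_alpha(src, src_alpha, dst):
    """SrcAlpha / OneMinusSrcAlpha: result = src * a + dst * (1 - a)."""
    return tuple(
        clamp01(s * src_alpha + d * (1.0 - src_alpha))
        for s, d in zip(src, dst)
    )

black = (0.0, 0.0, 0.0)          # background of the spark texture
spark = (1.0, 0.5, 0.0)          # bright part of the spark texture
background = (0.25, 0.25, 0.5)   # whatever is already on screen

print(blend_additive(black, background))    # black leaves the screen unchanged
print(blend_additive(spark, background))    # spark brightens the screen
print(blend_max(black, background))         # black also vanishes with Max
print(blend_alpha(black, 1.0, background))  # opaque alpha blend covers the screen
```

In this model only the fully opaque alpha blend lets black cover what is behind it, so if the black (or an outline of it) still hides other particles, I suspect some setting other than the blend factors is involved.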

So, I would like help making the particles have the glow intended by the texture, and making them see-through, like SirHaian’s. I also tried adding an alpha channel to the texture, but that did not give me the effect I wanted. Thanks for your attention, and please let me know where I’m going wrong here!