Stencil Buffer Test with a small extra detail: elements can leave the stencil area.

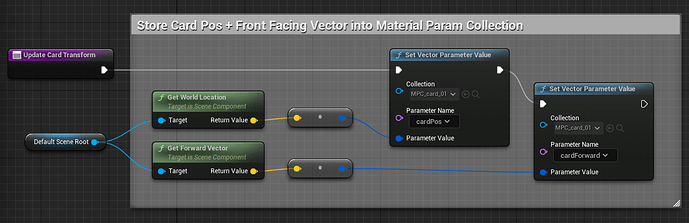

This is done by adding everything in front of the card to the stencil mask. For this to work, I store the card's position and front-facing vector via Blueprint:

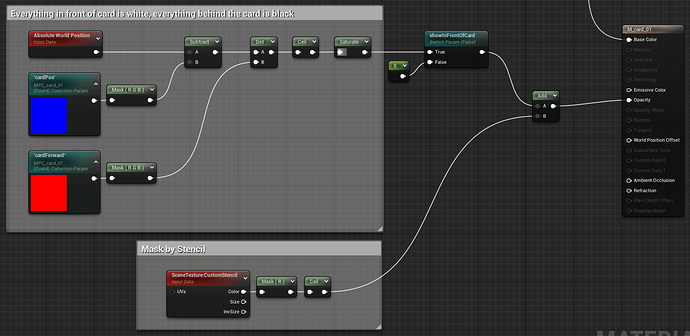

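In the material, I then mask out the area in front of or behind the card with a simple dot product: the sign of the dot product between the card's front-facing vector and the vector from the card position to the pixel tells me which side of the card the pixel is on.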
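For reference, here is a minimal sketch of that front/back test in plain Python rather than material nodes. The names `in_front_of_card`, `pixel_pos`, `card_pos` and `card_front` are made up for illustration, and the real material graph may well differ (for example in how it treats pixels lying exactly on the card plane).

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Standard 3D dot product."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def in_front_of_card(pixel_pos: Vec3, card_pos: Vec3, card_front: Vec3) -> float:
    """Return 1.0 if the pixel is in front of the card plane, else 0.0.

    card_pos and card_front stand in for the two values stored via Blueprint
    (hypothetical names). Pixels exactly on the plane count as "behind" here,
    which is an arbitrary choice for this sketch.
    """
    # Vector from the card to the pixel being shaded.
    to_pixel = (
        pixel_pos[0] - card_pos[0],
        pixel_pos[1] - card_pos[1],
        pixel_pos[2] - card_pos[2],
    )
    # Positive dot product: the pixel lies on the side the card faces.
    return 1.0 if dot(to_pixel, card_front) > 0.0 else 0.0


# A card at the origin facing +X.
card_pos = (0.0, 0.0, 0.0)
card_front = (1.0, 0.0, 0.0)

mask_front = in_front_of_card((2.0, 1.0, 0.0), card_pos, card_front)
mask_back = in_front_of_card((-2.0, 1.0, 0.0), card_pos, card_front)
```

The resulting 0/1 value is what would decide whether a pixel gets added to the stencil mask; in a material, the same comparison would run per pixel on world position.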

Little list of inspirations for later:

- https://twitter.com/Lasphir/status/1618298181154115585

- https://www.artstation.com/artwork/WK5JYQ

- https://www.artstation.com/artwork/L3d6Yr

- https://www.artstation.com/artwork/nE5WL6

- https://www.artstation.com/artwork/8wg2aq

- https://www.artstation.com/artwork/9mvAky

- https://www.artstation.com/artwork/3oA9YA

- https://www.artstation.com/artwork/8wgXYx

- https://www.artstation.com/artwork/OokRVK

Dragon Breath:

- XL18 Flamethrower!!! - YouTube

- Lost Ark Monster Kaishtter Skill FX - YouTube

- Unreal Engine 4 - Dragon Breath Fire Effect - YouTube

- Dragon Breath Making of - YouTube

- https://www.artstation.com/artwork/Arleo5

- https://www.artstation.com/artwork/oVmmJ

- Game of Thrones - Season 7 | Breakdown Reel | Image Engine VFX - YouTube

- https://www.artstation.com/artwork/Len4aK