You’re right. In that video it does look like they’re mesh-based rather than sprite-based. They’re probably mixing meshes with normal-mapped sprites as fill.

Yeah. All the non-killing blood squirts seem to be sprite-based, so it’s probably all mixed together. Either way, a great effect!

60hz? Damn, our minimum is 90 0_o. We’re pretty much using extensive flipbooks for most of the complex stuff to help avoid overdraw.

Yeah, as far as I know almost all of the PSVR titles / experiences from “AAA” devs are 60fps reprojected to 120hz. Batman, Tomb Raider, Jackal Assault, Driveclub VR, RE7, all run at 60fps. UE4 based games didn’t even support 90fps on PS4 as an option until recently. Ubisoft’s Eagle Flight is the only exception I’m aware of, though it’s possible Rigs runs at 90 too. Most of the Unity based projects run at 90fps (like Wayward Sky, Job Sim, and Headmaster), as well as the games using custom engines (Thumper, Super Hypercube). The only 120fps native titles I know are first party Phyre engine based ones, like Robot Rescue. 120 and 90 feel great, and our experience was 60hz wasn’t good enough, especially for hand tracking, but 90hz is hard and requires giving up a lot of visuals. Robot Rescue only hit 120hz by running at a greatly reduced resolution.

BTW, they have used sim-baked mesh sequences from RE5, mainly for blood splashes.

This is an article (in Japanese) about RE6.

Is it possible to get an English version? Thanks.

I have tried this workflow.

One small thing I found that might be fun to discuss is the lack of motion blur between sequence frames when using simple mesh-swapping.

I thought about a possible solution but I’m not sure if it would work.

I would bake the velocity per vertex on the mesh as RGB (xyz). This is very common in post production, where it allows motion blur to be edited in the render or in the composite. The baking has to be done for each mesh in the sequence, so that every vertex in every mesh stores its velocity direction.

Then in the real-time engine, the baked vertex colours (velocity data) from the meshes should be applied to the Velocity render pass somehow? This is the step I’m not sure how to do. Would this work in principle?
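The baking step described above could look something like the sketch below. It is only an illustration, not anyone's production tool: the function names, the `max_speed` range, and the forward-difference estimate are all assumptions, and it only works if consecutive frames share the same vertex count and order. If the simulation changes topology every frame, the velocity would have to be exported by the simulator itself instead.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def encode(component: float, max_speed: float) -> float:
    """Map a signed velocity component to 0..1, with 0.5 meaning no motion."""
    clamped = max(-max_speed, min(max_speed, component))
    return 0.5 + 0.5 * clamped / max_speed


def bake_velocity_colors(
    frames: List[List[Vec3]], fps: float, max_speed: float
) -> List[List[Vec3]]:
    """Return one RGB colour per vertex per frame (hypothetical helper).

    Velocity is a forward difference to the next frame. The last frame
    reuses the velocity of the final frame pair.
    """
    n = len(frames)
    if n < 2:
        # A single frame has no motion to measure.
        return [[(0.5, 0.5, 0.5)] * len(f) for f in frames]

    baked = []
    for i in range(n):
        j = min(i, n - 2)  # clamp so the last frame still has a "next" frame
        src, dst = frames[j], frames[j + 1]
        if len(src) != len(dst):
            raise ValueError("frames must share vertex count and order")
        baked.append([
            tuple(encode((d - s) * fps, max_speed) for s, d in zip(p0, p1))
            for p0, p1 in zip(src, dst)
        ])
    return baked
```

On the engine side, the colour would be decoded with `(c * 2 - 1) * max_speed` per channel before it is written anywhere velocity is expected.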

edit: typo

Yeah, it should work. Motion blur is just a post process in most engines, so if you send the vertex color/velocity to the scene texture velocity map, you should be golden. Though, I haven’t seen an engine that allows you to send vertex colors to the scene texture out of the box.

You haven’t seen a program that allows you to render vert colors as unlit emissive?

I don’t get it?

To affect the motion blur, you’d need to be able to send the vert colors to the velocity pass in the scene texture. Is that possible nowadays? I haven’t used that side of Unreal for a while, so maybe there’s an easy way of doing so that I’m missing.

sorry, i misread and stopped at “scene texture”

bit much coffee this morning

and five hour energy

It’s relatively trivial to do for Unity, and Unreal just added support for custom velocity vectors for vertex animation that might be abuse-able for this. The hardest part for Unity is that batching would break this, and particles are always batched, so you’d have to bake the vectors into the vertex tangents to ensure they get properly transformed.

@Alcolepone I’ve uploaded my booth presentation where I show how to make static versions of this sort of thing. To make a sequence, you use the same network, just export more frames and play them after each other. It’s not a straight-up tutorial, but all steps are shown and it comes with example files.

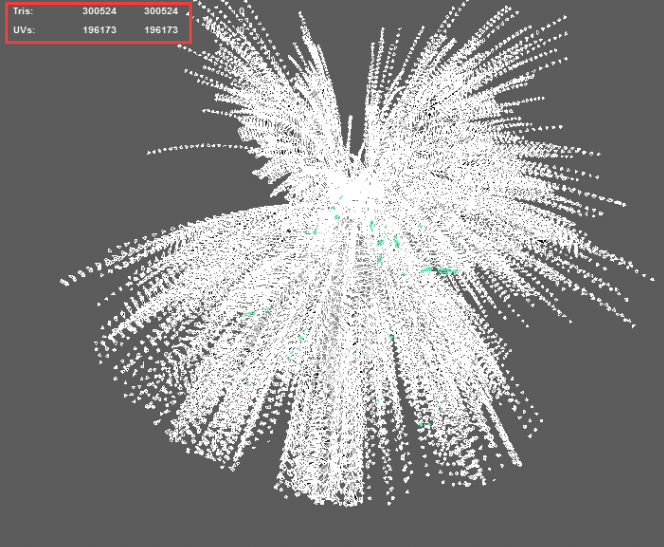

I simulated a mesh sequence in RealFlow and tried to bring it into Unity. Even after face reduction in Maya, the face count is still high.

It’s a 41-frame sequence and the total face count is now about 300k, 2% of the original.

I tried to reduce the faces further, but then it won’t keep its shape any more.

Are there any suggestions on how to control the face count of the sequence?

Bake it down to a texture that is read in the vertex shader, so you only have one frame as a mesh and the rest are stored as positions in a texture.

Realistically, storing 41 frames of a simulation isn’t going to give you a detailed enough result once it’s reduced down to acceptable levels.
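As a rough sketch of the texture-baking suggestion above: one row per frame, one pixel per vertex, with positions normalised to the bounding box of the whole sequence. The function names and the layout are assumptions made for illustration, and the sketch assumes every frame has the same vertex count and order, which a raw fluid sim export usually does not provide without extra processing.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def bake_position_texture(frames: List[List[Vec3]]):
    """Return (pixels, lo, hi) where pixels[frame][vertex] is RGB in 0..1.

    lo and hi are the bounds of the whole sequence. A shader would need
    them to turn a sampled colour back into a position.
    """
    vertex_count = len(frames[0])
    if any(len(f) != vertex_count for f in frames):
        raise ValueError("all frames must share vertex count and order")

    points = [p for frame in frames for p in frame]
    lo = tuple(min(p[k] for p in points) for k in range(3))
    hi = tuple(max(p[k] for p in points) for k in range(3))
    # Guard against a flat axis, which would otherwise divide by zero.
    size = tuple((h - l) or 1.0 for l, h in zip(lo, hi))

    pixels = [
        [tuple((p[k] - lo[k]) / size[k] for k in range(3)) for p in frame]
        for frame in frames
    ]
    return pixels, lo, hi


def decode_position(pixel: Vec3, lo: Vec3, hi: Vec3) -> Vec3:
    """Inverse of the normalisation; mirrors what the vertex shader would do."""
    return tuple(l + c * (h - l) for c, l, h in zip(pixel, lo, hi))
```

For a 41-frame sequence this would give a texture 41 rows tall and as wide as the vertex count. Precision depends on the texture format, so a float or 16-bit format is likely a safer choice than 8-bit for anything but small bounds.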