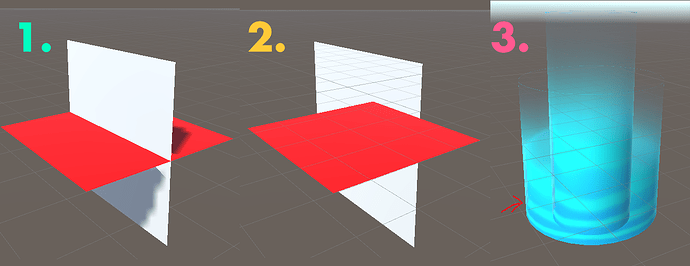

Hi! I’m having trouble getting two intersecting quads (or 3D models) to render correctly in Unity. If the material is set to Opaque, there is no problem at all: the two surfaces cut through each other where they intersect (see pic1).

However, as soon as I set the same material to Transparent, weird things start happening (pic2).

One of the quads is always drawn completely in front of the other, and which one wins depends on their distances from the camera. I’ve learned how to choose which material is painted on top (for example, by lowering the render queue number of the one that should be drawn underneath), but I can’t get the two to cut through each other. I’m almost sure this has something to do with how the z-buffer works, but I couldn’t find a good workaround.
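A toy model of what is likely happening, under stated assumptions: opaque surfaces are resolved per pixel by the depth test with depth writes on, while transparent materials (such as the Standard shader in Transparent mode) render with depth writes off and are sorted once per object by distance to the camera. This is plain Python, not Unity code; the 10-pixel row, the depth functions and the helper names are all hypothetical, and the per-object sort by average depth is a simplification of Unity's actual sorting.

```python
# Toy 1D model (hypothetical): two intersecting quads seen along a row of pixels.
# Depth = distance from the camera at each pixel; smaller means closer.
WIDTH = 10

def depth_a(x: int) -> float:
    return float(x)  # quad A tilts away from the camera, left to right

def depth_b(x: int) -> float:
    return 9.5 - x  # quad B tilts the other way; the quads cross near x = 4.75

QUADS = {"A": depth_a, "B": depth_b}

def render_opaque() -> str:
    """Per-pixel depth test with depth writes on (opaque-style rendering)."""
    row = []
    for x in range(WIDTH):
        zbuffer = float("inf")  # cleared depth buffer
        color = "."
        for name, depth in QUADS.items():
            z = depth(x)
            if z < zbuffer:   # depth test: keep the nearer fragment
                zbuffer = z   # depth write: later, farther fragments get rejected
                color = name
        row.append(color)
    return "".join(row)

def render_transparent() -> str:
    """Per-object back-to-front sort with depth writes off (transparent-style).
    Each pixel reports which quad ends up on top after blending."""
    def center_depth(depth) -> float:
        # ASSUMPTION: one sort key per object, here its average depth
        return sum(depth(x) for x in range(WIDTH)) / WIDTH

    # Farthest object first, nearest object last
    order = sorted(QUADS.items(), key=lambda kv: center_depth(kv[1]), reverse=True)
    row = []
    for x in range(WIDTH):
        top = "."
        for name, _depth in order:
            # No depth writes and no opaque geometry here, so nothing rejects
            # this fragment: whatever is drawn last ends up on top
            top = name
        row.append(top)
    return "".join(row)

print(render_opaque())       # AAAAABBBBB
print(render_transparent())  # AAAAAAAAAA
```

In this model the opaque pass produces a cut at the crossing point, while the transparent pass puts one quad on top at every pixel, which matches the difference between pic1 and pic2. Because the order is decided once per object rather than per pixel, moving the camera or changing the render queue can only swap which quad wins; it never splits one quad across the intersection.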

There must be a way to do this, since it is very common in video games, especially for VFX (just think of Rainbow Circuit in Mario Kart!). See what happens when I try to create a simple VFX of two cylinders, one inside the other (pic3).

Here, the inner cylinder is drawn on top of the outer one, and the back faces also render incorrectly (see the red arrow).

You can easily reproduce this with two intersecting quads in a new scene: create a new material, set its Rendering Mode to Transparent, and assign it to both quads. I’m using the built-in (standard) render pipeline, but I think this also happens in URP.

Thanks in advance for the help!